The most impactful customer experiences are data-driven. CDPs help organizations collect, unify, manage, and analyze customer data. This allows businesses to understand their customers better and deliver personalized digital experiences.

But selecting and implementing a CDP is not a straightforward journey. It’s a digital transformation that begins with organizations understanding what a CDP can do for them and how they can utilize the platform to their advantage.

Evaluating If You Need A CDP

Every organization likes to think they know their customers, but most are missing out. According to a study conducted by Mapp, an international provider of insight-led customer engagement, lack of customer insight is the biggest challenge in providing personalized experiences.

Successfully implementing a CDP can help fix this challenge. Submit this form to find out if your organization needs a CDP.

The CDP Implementation Framework: Everything From Discovery To Implementation & Enablement

Implementing a Customer Data Platform (CDP) involves a structured approach encompassing discovery, strategy, implementation, and enablement.

Stage 1: Discovery

During the discovery phase, it's essential to gain a clear understanding of your organization's data landscape, business goals, and available resources. Key aspects include:

1. Data Maturity And Business Goals

To assess data maturity, consider the following:

- Volume: How much data does your organization generate and collect?

- Variety: What types of data are available (e.g., customer demographics, transaction history)?

- Velocity: How quickly is data generated and updated?

- Veracity: How reliable and accurate is the data?

- Value: What insights can be derived from the data to drive business decisions?

For example, a retail company may have vast amounts of transaction data but lack comprehensive customer profiles.

2. Data Inventory And Quality Assessment

Cataloging existing data sources involves identifying where data resides, such as:

- Customer Relationship Management (CRM) systems

- Enterprise Resource Planning (ERP) systems

- Web analytics platforms

Assessing data quality entails evaluating data accuracy, completeness, and consistency. For instance, organizations may discover inconsistencies in customer information across different databases, impacting marketing campaigns' effectiveness.
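As a rough illustration of such an assessment, the sketch below compares customer records from two hypothetical sources (a CRM export and a billing system) and reports completeness and cross-source consistency. The field names, the sample records, and the `completeness` and `inconsistencies` helpers are assumptions made for this example and are not tied to any particular CDP.

```python
from typing import Dict, List, Tuple

# Hypothetical fields that every customer record is expected to carry.
REQUIRED_FIELDS = ["email", "name", "country"]


def completeness(records: List[Dict[str, str]]) -> float:
    """Return the share of required fields that are present and non-empty."""
    total = len(records) * len(REQUIRED_FIELDS)
    if total == 0:
        return 1.0
    filled = sum(
        1
        for record in records
        for field in REQUIRED_FIELDS
        if record.get(field, "").strip()
    )
    return filled / total


def inconsistencies(
    crm: List[Dict[str, str]], billing: List[Dict[str, str]]
) -> List[Tuple[str, str]]:
    """List (customer id, field) pairs whose values differ between sources."""
    billing_by_id = {record["id"]: record for record in billing}
    issues = []
    for record in crm:
        other = billing_by_id.get(record["id"])
        if other is None:
            continue  # customer only exists in one system
        for field in REQUIRED_FIELDS:
            left = record.get(field, "").strip().lower()
            right = other.get(field, "").strip().lower()
            # Only flag a conflict when both systems hold a value.
            if left and right and left != right:
                issues.append((record["id"], field))
    return issues


# Invented sample data, for illustration only.
crm = [
    {"id": "c1", "email": "ana@example.com", "name": "Ana Ruiz", "country": "ES"},
    {"id": "c2", "email": "", "name": "Ben Cho", "country": "US"},
]
billing = [
    {"id": "c1", "email": "ana@example.com", "name": "Ana Ruiz", "country": "PT"},
    {"id": "c2", "email": "ben@example.com", "name": "Ben Cho", "country": "US"},
]

print(f"CRM completeness: {completeness(crm):.0%}")
print("Conflicting fields:", inconsistencies(crm, billing))
```

A check like this only surfaces candidates for review. Deciding which system holds the correct value is still a governance question for the data owners.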

3. Stakeholder Engagement And Workflow

Engage stakeholders from various departments (e.g., marketing, sales, IT) to understand their data needs and ensure alignment with business objectives. Establish clear roles and responsibilities, such as:

- Data stewards responsible for data governance

- Analysts tasked with generating insights from customer data

Define workflows to streamline data collection, processing, and analysis. For example, establish protocols for updating customer profiles and sharing insights across teams.

Key Questions To Ask

Ask relevant questions to guide the discovery process.

Project & Business Objectives

- What are the specific goals for this CDP implementation?

- What problem are you trying to solve with the CDP?

- What are your business goals and how does the CDP align with them?

- What would be considered a success for the CDP implementation?

- What are the current solutions and how can CDP improve them?

Budget & Timelines

- What is the budget for the CDP implementation?

- Is there a fixed deadline or time constraints for the project?

- Are there any financial limitations that need to be considered?

Identify Stakeholders

- Who are the primary stakeholders in this CDP project?

- What are the needs and expectations of each stakeholder?

- How will different stakeholders be involved in the project?

Data Sources & Quality

- What are the sources of your customer data?

- What is the quality and maturity of the data you currently have?

- Who owns the data and where does it come from?

Segmentation & Identification Strategy

- How will you segment your customer data?

- What is your strategy for identifying and resolving customer identities?

The Action Plan & Expectations

- What are the most impactful outcomes you expect from the CDP?

- How do you plan to monitor, measure, and capture the value of the CDP?

Make sure you can answer all of these questions before developing your CDP implementation strategy.

Stage 2: Strategy

The strategy phase focuses on developing a comprehensive plan to leverage the CDP effectively. Key considerations include:

1. Data Strategy Development

Define a data strategy aligned with business objectives and use cases. For example:

- Personalization: Use customer data to deliver personalized experiences and targeted marketing campaigns.

- Customer Retention: Identify at-risk customers and implement strategies to improve retention rates.

Plan implementation phases in a "crawl, walk, run" approach to prioritize high-impact use cases and ensure gradual adoption across the organization.

2. Customer Data Landscape Assessment

Assess the customer data landscape by identifying all data sources and their integration capabilities. Consider:

- Online & Offline Data: Integrate data from online sources (e.g., website interactions, social media) and offline sources (e.g., in-store purchases, call center interactions).

- Identity Resolution: Develop a strategy for resolving customer identities across different data sources to create unified customer profiles.

Define the universal data layer, including data points and naming conventions, to ensure consistency in data collection and storage.

3. Stakeholder Engagement & Collaboration

Ensure alignment across departments and stakeholders by:

- Hosting workshops and collaborative sessions to gather input from various teams.

- Establishing a governance structure to manage data access, usage, and privacy.

- Defining workflows and data ownership responsibilities to streamline collaboration and decision-making processes.

Stage 3: Implementation

During the implementation phase, the focus shifts to executing the plan and mapping data to the CDP effectively. Key steps include:

1. Data Mapping & Integration

Map data from various sources to the CDP to create a unified view of customer data. For example:

- Integrate CRM data to track customer interactions and purchase history.

- Combine website analytics data to understand customer behavior and preferences.

- Implement identity resolution to merge duplicate customer records and create accurate customer profiles.

2. Identity Resolution

An important part of implementation is identity resolution (IR). When customer data surges in from various channels, it can be challenging to work out which records belong to the same person. A Customer Data Platform (CDP) makes this much easier.

Imagine three records arriving from different channels, each holding only part of one customer's details.

These three records all belong to the same person, but more basic systems might mix the data up and create three separate Dracos. A CDP will use its IR capabilities to consolidate records like these into a single customer profile, and the resolved record would complete Mr. Malfoy’s profile.
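To make the idea concrete, here is a minimal sketch of deterministic identity resolution. It assumes that two records belong to the same person whenever they share a normalized email address or phone number. The three records, their field names, and the `resolve` helper are all invented for illustration; real CDPs typically combine rules like this with more sophisticated matching.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]

# Hypothetical records from three channels; every value here is invented.
records: List[Record] = [
    {"source": "web", "name": "Draco Malfoy", "email": "draco@example.com",
     "phone": None, "city": None},
    {"source": "store", "name": "D. Malfoy", "email": None,
     "phone": "555-0100", "city": "Wiltshire"},
    {"source": "support", "name": None, "email": "DRACO@example.com",
     "phone": "555-0100", "city": None},
]


def match_keys(record: Record) -> List[str]:
    """Build normalized identifiers used to link records together."""
    keys = []
    if record.get("email"):
        keys.append("email:" + record["email"].strip().lower())
    if record.get("phone"):
        keys.append("phone:" + record["phone"].strip())
    return keys


def resolve(items: List[Record]) -> List[dict]:
    """Group records that share any match key and merge each group."""
    parent = list(range(len(items)))  # union-find forest over record indexes

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    first_seen: Dict[str, int] = {}
    for index, record in enumerate(items):
        for key in match_keys(record):
            if key in first_seen:
                parent[find(index)] = find(first_seen[key])
            else:
                first_seen[key] = index

    profiles: Dict[int, dict] = {}
    for index, record in enumerate(items):
        profile = profiles.setdefault(find(index), {"sources": []})
        profile["sources"].append(record["source"])
        for field in ("name", "email", "phone", "city"):
            # Keep the first non-empty value seen for each field.
            if record.get(field) and field not in profile:
                profile[field] = record[field]
    return list(profiles.values())


for profile in resolve(records):
    print(profile)
```

In this sketch the web and support records are linked by email and the store and support records by phone, so all three collapse into one profile that carries the name, email, phone, and city together.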

Stage 4: Enablement

The enablement phase involves preparing for operational deployment and deriving value from the CDP. Key activities include:

1. Provisioning & Training

Set up the production environment and train users on CDP functionalities, including:

- Data ingestion and integration processes

- Segmentation and targeting capabilities

- Reporting and analytics features

Build segments, reports, and personalized campaigns to leverage customer data effectively in marketing initiatives.

2. Iteration & Scaling

Start with small, manageable goals for quick wins and iterate based on feedback and insights from the CDP. For example, test different segmentation strategies to identify high-value customer segments.

Users can also analyze campaign performance metrics to optimize targeting and messaging. Scale up over time by expanding the use of the CDP across departments and incorporating additional data sources and use cases.

Conclusion

Do you need more help in building a strategic roadmap for a CDP implementation? Or are you not even sure if opting for a CDP is the right solution?

Submit the form to get personalized recommendations from our CDP experts.

Network, learn, and collaborate: these are the three key motivations for individuals and organizations to participate in conferences. Every regular conference has a theme or niche that serves as a focal point for discussions and advancement. These events serve as stages for personal branding and business promotion, with attendees aiming to gain insights and contacts that directly benefit their individual goals and organizational interests.

Although open-source events rely on these key motivations too, they have a unique flavor of community spirit and collaboration that’s not found in traditional conferences. Open source events like DrupalCons thrive on shared knowledge, transparent innovation, and a sense of collective growth.

What is DrupalCon?

DrupalCon is an annual open-source conference that brings together open-source enthusiasts, developers, designers, and end users for networking, learning, and collaboration, all under one roof. This is where you can meet the people who made the software, get inspired, and actively contribute to the project. The next DrupalCon North America event is being held in Portland, Oregon, from 06 May 2024 to 09 May 2024. We’ll give you some reasons why you should attend open-source events like DrupalCon 2024.

Benefits of Attending Open Source Conferences

An open-source enthusiast knows that events like DrupalCons are celebrations of community-driven innovation. The energy is contagious, the ideas are limitless, and the camaraderie extends beyond the conference halls.

Spirit of Open-Source

Open source is almost synonymous with collaboration, and contributors are the heartbeat of any open-source project. These events provide a platform for individuals and organizations to come together, contribute to the community, and drive the future of open source. This aligns with the open-source commitment to empowering innovation through the collective efforts of a vibrant and engaged community. At an event like DrupalCon, you get a chance to meet people who are passionate about Drupal and driving it forward.

Career Boost

If you're launching your career or contemplating a switch to something more fulfilling, few experiences rival the rewards of joining an open-source community. And there’s no better place to kick off this journey than an open-source conference. You’re not just exploring job opportunities but also gaining the knowledge you need from training sessions and meaningful interactions with seasoned experts. You can also upgrade your skills through hands-on workshops and interactive sessions at the event. At DrupalCon, you can always find support if you’re new to the world of Drupal or open source. A mentor will help guide you through your entire experience by suggesting which sessions you should attend for your professional development. You can even learn to make your first contribution to the project with your mentor's help.

Spot the Trend

Want to know what’s new in your area of interest? Open-source conferences are the best places to identify emerging trends, innovations, and shifts in the industry, long before they become mainstream! You come out well-equipped with insights into upcoming technologies and initiatives. This will not only help you in your professional development but also enable you to contribute meaningfully to innovative projects. All of this ultimately leads to improved user experiences and future-ready applications. At DrupalCon, immerse yourself in firsthand insights as Dries Buytaert, the founder himself, shares the current state of Drupal in his keynote (DriesNote). Discover upcoming initiatives and innovation on the horizon, and get a sneak peek into the exciting developments set to launch.

The Power of Open Source Networking

We all know how powerful networking can be for your career or business development. But for an open-source community, networking is an indispensable aspect. It's impossible to have a successfully operating community without networking. Open-source events let you connect with like-minded individuals, developers, agencies, and contributors, fostering potential collaboration. Get mentorship, guidance, and exposure to new opportunities to aid your professional growth. Attend DrupalCon to connect with thousands of open-source enthusiasts and build meaningful connections with professionals just like you. Programs like BoFs (Birds of a Feather) at DrupalCon let you exchange information and share best practices around a common topic of interest. Make DrupalCon your opportunity to grow.

Real-World Learning

Learning from real-world scenarios truly refines your understanding of technology and innovation. Attending industry summits at open-source conferences is a great way to gain practical insights from industry leaders. It’s a chance to understand the real-world challenges they face and the practical solutions they have implemented. Through live demos, case studies, and applications, you can see these solutions in action. Industry summits often highlight the methodologies that are proving successful in the current landscape, providing actionable takeaways. DrupalCon has a full day dedicated to industry summits such as the higher education summit, nonprofit summit, government summit, and community summit.

Final Thoughts

Whether it's networking opportunities, hands-on learning, or trend forecasting, open-source conferences offer a holistic approach to staying on top of ever-changing technologies. They contribute to the collective growth of the entire open-source community. It's an investment in continuous learning, professional enrichment, and the boundless possibilities of open collaboration. Did we mention that DrupalCons aren't just about coding and tech talk? There's a ton of fun to be had too! Take a look at the social events from last year.

Data plays a pivotal role in shaping customer interactions, so implementing a CDP can empower businesses to unify and analyze customer data from various sources. This can lead to more personalized and effective marketing strategies, improved customer segmentation, and enhanced digital experiences.

However, diving into CDP implementation without proper assessment can lead to challenges such as underutilization of the platform, data management issues, and inadequate return on investment. To avoid these challenges, organizations must comprehensively evaluate their readiness across various dimensions before embarking on a CDP implementation journey.

Evaluating Readiness For CDP Implementation

Before implementing a Customer Data Platform (CDP), organizations must establish several prerequisites to ensure a successful integration and utilization of the platform.

- Ensure a clear data strategy, outlining objectives, sources, and governance frameworks aligned with broader business goals.

- Ensure good data quality through cleansing and validation for accurate insights and decision-making.

- Assess and enhance the technology infrastructure required to support a CDP integration.

- Foster cross-functional collaboration, secure stakeholder buy-in, allocate resources, and develop a comprehensive change management plan.

Once these prerequisites are in place, the next step is to conduct a thorough evaluation across different dimensions.

Do You Need A CDP

Assess your organization across the following dimensions to evaluate the need for a CDP.

1. Data Maturity & Infrastructure

Assess your organization's data management capabilities and infrastructure readiness for CDP integration.

- Current Data Sources

Identify existing data sources and evaluate their formats, accessibility, and captured data.

- Integration Capability

Assess the ability to integrate data sources with a CDP, considering API availability and compatibility.

- Data Quality & Consistency

Ensure data accuracy, reliability, and adherence to governance and compliance standards.

Organizations with scattered data across various platforms and systems who wish to enable better decision-making should consider implementing a CDP. On the other hand, organizations with limited data sources do not need a CDP and should consider a different solution depending on their goal.

2. Organizational Readiness

Evaluate your organization's readiness beyond technology.

- Stakeholder Alignment

Ensure key stakeholders understand and support the CDP initiative.

- Skillset Availability

Assess if your team has the necessary skills for CDP implementation or if training/new hires are needed.

- Technology Stack Compatibility

Evaluate compatibility with existing technology and potential upgrades.

Organizations where multiple stakeholders across departments need access to unified customer data for decision-making should consider opting for a CDP. On the other hand, organizations with a unified vision for utilizing existing data sources and access to the right technology infrastructure for supporting data integration can do without a CDP.

3. Future Scalability

Consider the scalability of your CDP implementation.

- Scalability Assessment

Evaluate scalability to accommodate future business growth.

- Flexible Architecture

Ensure the CDP architecture can adapt to evolving data sources and business needs.

Implementing a CDP is a great idea for organizations anticipating significant customer data volume and complexity growth. It is also ideal for organizations anticipating the adoption of new technology and data sources in the future.

Businesses anticipating minimal growth in customer data volume and with a stable industry can look into other avenues for building better customer experiences.

4. Change Management

Address organizational changes associated with CDP implementation.

- Culture Of Data-Driven Decision Making

Foster an environment where decisions are based on data insights.

- Process Integration

Ensure seamless integration with existing processes to make data insights actionable.

- Change Management Strategies

Implement strategies to manage organizational transitions smoothly.

Organizations transitioning to a data-driven culture with the necessary stakeholder approvals, training, and support are usually in a great position to implement a CDP. Organizations without this culture, or those not looking to change their current processes and systems, should refrain from implementing a CDP.

5. Resource Availability

Ensure the availability of resources for successful CDP implementation.

- Technical Expertise

Assess the need for skilled data management, integration, and analysis personnel.

- Infrastructure

Ensure adequate technological infrastructure to support CDP requirements.

- Budget

Allocate the budget for initial implementation, maintenance, and updates.

Implementing and managing a CDP requires specialized skills and expertise. Organizations that have this expertise, or are willing to invest in it, should consider implementing a CDP.

6. Data Democratization

Promote accessibility and understanding of data across the organization.

- User-Friendly Tools

Implement tools for easy data access and interpretation.

- Data Governance

Establish clear policies on data access, usage, and security.

- Training & Literacy

Provide training to improve data literacy across the organization.

Organizations looking to unify customer data for decision-making should consider implementing a CDP.

7. Technology Compatibility & Integration

Ensure seamless integration with existing technology platforms.

- Existing Tech Stack Assessment

Evaluate compatibility with current systems.

- API & Data Exchange Capabilities

Ensure seamless data exchange with other systems.

Organizations whose existing technology platforms operate in silos and hinder data exchange and integration should consider opting for a CDP. Businesses whose existing platforms operate cohesively and already allow seamless data exchange and integration may not need a specialized platform like a CDP.

8. Use Case Definition & Business Goals

Align CDP implementation with business objectives.

- Clear Use Cases

Identify specific use cases for the CDP.

- Alignment with Business Objectives

Ensure CDP directly contributes to achieving key business goals.

Implementing a CDP is a good idea when businesses require advanced data analysis capabilities, such as personalized marketing or real-time analytics, that cannot be achieved using existing tools. It can also help segment customers based on specific criteria and personalize marketing strategies.

9. Compliance & Data Governance

Ensure compliance with data privacy regulations and robust governance.

- Data Privacy & Security

Confirm compliance with data protection regulations.

- Audit & Reporting Requirements

Support necessary audit trails and reporting for compliance.

Organizations operating in highly regulated industries or globally must comply with necessary data governance and compliance regulations such as GDPR and CCPA.

10. Budget & ROI Consideration

Evaluate the financial aspects of CDP implementation.

- Cost-Benefit Analysis

Understand the total cost of ownership and expected ROI.

- Long-Term Financial Commitment

Consider long-term maintenance and scaling costs.

Implementing a CDP is beneficial when the potential benefits, such as improved customer engagement, increased sales, and enhanced marketing effectiveness, outweigh the initial investment and ongoing costs.

11. Vendor Evaluation

Select a suitable CDP vendor.

- Market Research

Conduct thorough research on potential CDP vendors.

- Proof Of Concept

Consider running a pilot program to test effectiveness.

Customer support and assistance are crucial for successful CDP implementation and ongoing maintenance. Ensure a thorough vendor evaluation when choosing a reputable and reliable CDP vendor.

12. Scalability & Future-Proofing

Ensure CDP scalability and adaptability.

- Scalability Assessment

Ensure CDP can scale with business growth.

- Adaptability To Future Trends

Ensure CDP can adapt to future data trends and technological advancements.

A scalable CDP solution is necessary when businesses anticipate significant data volume and complexity growth. A CDP can help adapt to future data trends and technological advancements.

Submit The Form To Get Personalized CDP Recommendations

Assessing readiness across these areas ensures successful CDP implementation and effective utilization in driving business growth and enhancing customer experiences. You can also submit this form to determine if you need a CDP or speak to our experts about your business requirements and goals.

We're delighted to introduce Imre Gmelig Meijling, one of the newest members of the Drupal Association Board, elected in October. Imre, CEO at React Online Digital Agency in The Netherlands, brings a wealth of digital experience from roles at organizations like the United Nations World Food Programme, Disney, and Port of Rotterdam.

Imre is not only a member of the Drupal Association Board of Directors but also serves as an executive member on the DrupalCon Europe Advisory Committee. Previously, he chaired the Dutch Drupal Association, expanding marketing efforts and establishing a successful Drupal Partner Program. Imre played a key role in launching drupal.nl, a community website used by several countries. He co-created the Splash Awards and led Drupaljam, a Dutch Drupal event with almost 500 attendees. In 2023, Imre joined the Drupal Business Survey.

As a recent board member, Imre shares insights on this exciting journey:

What are you most excited about when it comes to joining the Drupal Association board?

I am very excited about joining the Drupal Association Board and contributing with insights and perspectives from the digital business market in Europe. Drupal has a strong market position with many opportunities for the coming years. I look forward to supporting the marketing team in their expanding efforts. I am particularly proud and excited to be part of an inclusive global community. Being part of an inclusive global community and supporting the Open Web Manifesto aligns closely with my personal values.

What do you hope to accomplish during your time on the board?

I aim to help expand Drupal's marketing outreach aiming for more wonderful brands and organizations adopting Drupal and attracting new talent to get involved with Drupal. I am also looking forward to establishing and sustaining relationships between Europe and other regions with the Drupal Association and finding ways to work even more closely together.

What specific skill or perspective do you contribute to the board?

Being part of an inclusive global community and supporting the Open Web Manifesto aligns closely with my personal values. Working with Drupal at various digital agencies in Europe, I support the growth of Drupal from a business perspective, but having a technical background, I know the strength the Drupal community has and what it can be for brands. Having been in both worlds for a long time, I will help make sure we bring them together.

I was Chair of the Board for the Dutch Drupal Association, in which time a successful Dutch Partner Program was launched. Also, marketing and advertising on mainstream media was taking off during that time. I was also involved in the design and setup of the Dutch Drupal website, which is now open source. I co-founded the Splash Awards and I am Executive Member of the DrupalCon Europe Community Advisory Committee. I will share all of my experiences where I can.

How has Drupal impacted your life or career?

It has been part of my life, both professional and personal, for over 16 years.

Tell us something that the Drupal community might not know about you.

I own my own digital agency in The Netherlands, React Online. I began my career as a UX designer and front end developer for Lotus Notes applications, called 'groupware' at the time, a long gone predecessor to the social collaboration platforms that we now know well. Interestingly, my birthday is on January 15, just like Drupal!

Share a favorite quote or piece of advice that has inspired you.

A true leader is not one with the most followers, but one who makes the most leaders out of others. A true master is not the one with the most students, but one who makes masters out of others.

We can't wait to experience the incredible contributions Imre will make during his time on the Drupal Association Board. Thank you, Imre, for dedicating yourself to serving the Drupal community through your board work! Connect with Imre on LinkedIn.

The Drupal Association Board of Directors comprises 12 members, with nine nominated for staggered 3-year terms, two elected by the Drupal Association members, and one reserved for the Drupal Project Founder, Dries Buytaert. The Board meets twice in person and four times virtually each year, overseeing policy establishment, executive director management, budget approval, financial reports, and participation in fundraising efforts.

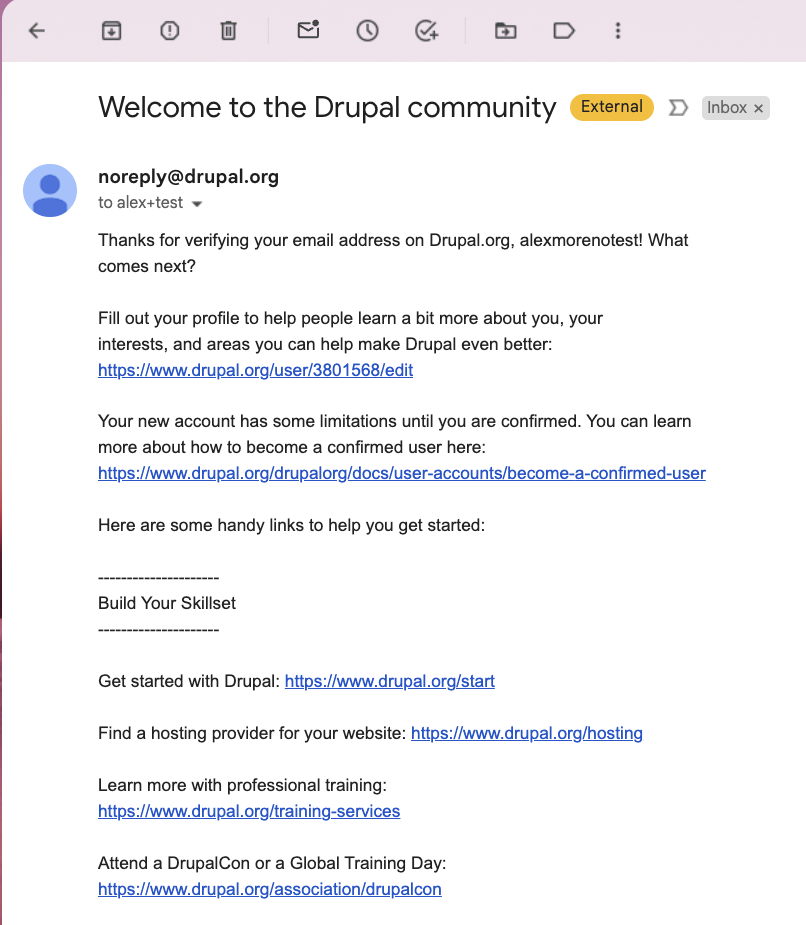

We have made a recent update on drupal.org that you probably haven’t noticed. In fact, although it's a meaningful and important section, I bet you have not seen it in months or even years. It's something you would have only seen when you registered on Drupal.org for the first time. I’m talking about the welcome email for newly registered users.

One of the goals we have had recently is improving the way our users register and interact with drupal.org for the first time. Improvements to onboarding should improve what I call the long tail of the (Open Source) contribution pipeline (a concept, the long tail of contribution, that I will explain further in the next few days).

For now, let’s have a look at one of the first things new users in our community used to see:

This is what I called the huge wall of text. Do you remember seeing it for the first time? Did you read any of it at all? Do you remember anything important in that email? Or did you just mark it read and move on?

Fortunately, we've taken a first, incremental step to make improvements. As I said before, this isn't something our existing userbase will see, but the new welcome email to Drupal.org has changed and been simplified quite a bit. Here is the new welcome email:

We have replaced a lot of the wall of text with a simpler set of sections and links, and landing pages on drupal.org. This simplifies the welcome email, but it will also allow us to track which links are most useful to new users, how many pages they visit on drupal.org, where they get stuck, and what interests them most, and to use that to make further refinements over time.

The other section I wanted to include is something that is very important for the Drupal Association, but also for the whole community. I wanted to highlight the contribution area, something that was not even mentioned in the old email. Our hope is this is an opportunity to foster and promote contribution from new users.

A few weeks ago I also launched a poll around contribution. This poll, combined with these changes to user registration, is aimed at the same goal: improving contribution onboarding. You can still participate if you’d like to, just visit https://www.surveymonkey.com/r/XRTNNM3

Now, if you are curious about what I am calling the long tail of the contribution pipeline, watch this space.

On the two-year anniversary of the Russian government’s attack on Ukraine, the Drupal Association wishes to reiterate its support for Ukraine. The invasion was an act of aggression, and our hearts are still with our Drupal Ukraine community.

We want to bring attention once more to the ways that the Drupal community can continue to support Ukraine. Here is a list of organizations* accepting donations to help people directly affected by the events in Ukraine:

- Nova Ukraine, a Ukraine-based nonprofit, provides citizens with basic needs and resources. Donate here.

- United Help Ukraine receives and distributes donations, food, and medical supplies to internally displaced Ukrainians and anyone affected by the war. Donate here.

- People in Need provides humanitarian aid to over 200,000 people on the ground. Donate here.

- The Ukrainian Red Cross undertakes humanitarian work, from aiding refugees to training doctors. Donate here.

- UN Refugees Agency supports refugees. Donate here.

- UNICEF Ukraine is repairing schools damaged by the bombings and providing emergency responses to children affected by the war. Donate here.

As always, our global Drupal community is better together. We stand in solidarity and hope for peace.

*List of resources originally compiled by Global Citizen

Routes in Drupal can be altered as they are created, or even changed on the fly as the page request is being processed.

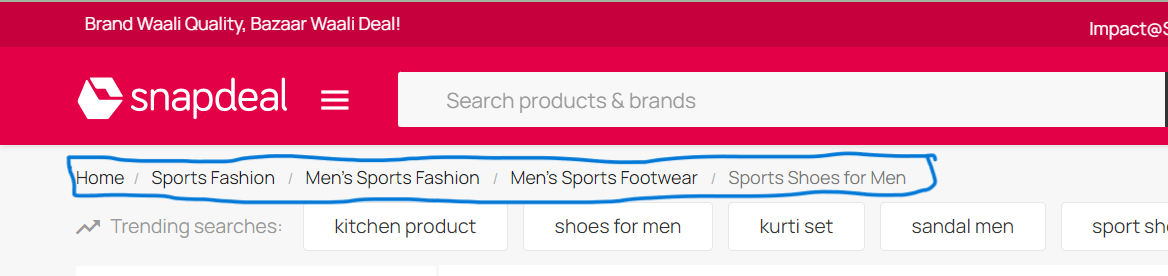

In addition to a routing system, Drupal has a path alias system where internal routes like "/node/123" can be given SEO-friendly paths like "/about-us". When the user visits the site at "/about-us" the path will be internally rewritten to allow Drupal to serve the correct page. Modules like Pathauto will automatically generate the SEO-friendly paths using information from the item of content, without the user having to remember to enter it themselves.

This mechanism is made possible thanks to an internal Drupal service called "path processing". When Drupal receives a request it will pass the path through one or more path processors to allow them to change it to another path (which might be an internal route). When Drupal generates a link to the page, the path processors work in the opposite direction and turn the internal path back into the public one.

It is possible to alter a route in Drupal using a route subscriber, but using path processors allows us to change or mask the route or path of a page in a Drupal site without actually changing the internal route itself.

In this article we will look at what types of path processor are available, how to create your own, what sort of uses they have in a Drupal site, and anything else you should look out for when creating path processors.

Types Of Path Processor

Path processors are managed by the Drupal class \Drupal\Core\PathProcessor\PathProcessorManager. When you add a path processor to a site this is the class that manages the processor order and calls the processors.

There are two types of path processor available in Drupal:

- Inbound - Processes an inbound path and allows it to be altered in some way before being processed by Drupal. This usually occurs when a user sends a request to the Drupal site to visit a page. Inbound path processors can also be triggered by certain internal processes, for example, when using a path validator. The path validator will pass the path to the inbound path processor in order to change it to ensure that it has been processed correctly.

- Outbound - An outbound path is any path for which Drupal generates a URL. The outbound path processor will be called in order to change the path so that the URL can be generated correctly.

Basically, the inbound processor is used when responding to a path, the outbound processor is called when rendering a path.

Let's go through a couple of examples of each to show how they work.

Creating An Inbound Processor

To register an inbound path processor with Drupal you need to create a service with a tag of path_processor_inbound, optionally including a priority. This lets Drupal know that this service must be used when processing inbound paths.

It is normal for path processor classes to be kept in the "PathProcessor" directory in your custom module's "src" directory.

    services:
      mymodule.path_processor_inbound:
        class: Drupal\mymodule\PathProcessor\InboundPathProcessor
        tags:
          - { name: path_processor_inbound, priority: 20 }

The priority you assign to the path_processor_inbound tag will depend on your setup. The internal inbound processor that handles paths in Drupal has a priority of 100, so any setting less than 100 will cause the processing to be performed before Drupal's internal handler is called.

The InboundPathProcessor class we create must implement the \Drupal\Core\PathProcessor\InboundPathProcessorInterface interface, which requires a single method called processInbound() to be added to the class. Here are the arguments for that method.

- $path - This is a string for the path that is being processed, with a leading slash.

- $request - In addition to the path, the request object is also passed to the method. This allows us to perform any additional checks on query strings on the URL or other parameters that may have been added to the request.

The processInbound() method must return the processed path as a string (with the leading slash). If we don't want to alter the path then we need to return the path that was passed to the method.

As a simple example, let's make sure that when a user visits the path "/some-random-path" (which is not the internal route for this page) we translate it internally to "/node/1". In this example, if the path passed into the method isn't our required path then we just return it, effectively ignoring any path but the one we are looking for.
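A minimal sketch of such a class, assuming the service definition shown earlier, might look like the following. The class name and namespace are taken from that service definition, the two paths are the illustrative ones used in this section, and the return type mirrors the method signatures used later in this article.

```php
<?php

namespace Drupal\mymodule\PathProcessor;

use Drupal\Core\PathProcessor\InboundPathProcessorInterface;
use Symfony\Component\HttpFoundation\Request;

/**
 * Maps the public path "/some-random-path" to the internal path "/node/1".
 */
class InboundPathProcessor implements InboundPathProcessorInterface {

  /**
   * {@inheritdoc}
   */
  public function processInbound($path, Request $request): string {
    if ($path === '/some-random-path') {
      // This is the path we are looking for, so hand Drupal the internal
      // path instead.
      return '/node/1';
    }

    // Any other path is returned unchanged.
    return $path;
  }

}
```

The strict comparison keeps the processor from touching anything other than the exact path, including paths that merely start with the same string.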

Now, when the user visits the path "/some-random-path" they will see the output of the page at "/node/1". It is still possible to view the page at "/node/1" and see the output, so we have just created a duplicate path for the same page.

This is a simple example to show how the processInbound() method works. We'll look at a more concrete example later.

Creating An Outbound Processor

The outbound processor is defined in a similar way to the inbound processor, but in this case we tag the service with the tag path_processor_outbound.

    services:
      mymodule.path_processor_outbound:
        class: Drupal\mymodule\PathProcessor\OutboundPathProcessor
        tags:
          - { name: path_processor_outbound, priority: 250 }

The priority of the path_processor_outbound tag is more or less the opposite of the inbound processor in that you'll generally want your outbound processing to happen later in the call stack. Drupal's internal outbound processor is set at 200, so setting our priority to 250 means that we process our outbound links after Drupal has created any aliases.

The OutboundPathProcessor class we create must implement the \Drupal\Core\PathProcessor\OutboundPathProcessorInterface interface, which requires a single method called processOutbound() to be added to the class. Here are the arguments for that method.

- $path - This is a string for the path that is being processed, with a leading slash.

- $options - An associative array of additional options, which includes things like "query", "fragment", "absolute", and "language". These are the same options that get sent to the URL class when generating URLs and allow us to update the outbound path based on the passed options.

- $request - The current request object is also sent to the method, so we can make decisions based on the parameters passed to the current path.

- $bubbleable_metadata - An optional object to collect path processors' bubbleable metadata so that we can potentially pass cache information upstream.

The processOutbound() method must return the new path, with a starting slash. If we don't want to change the path then we just return the path that was sent to us, otherwise we can make any change we require and return this string.

Taking the simple example from the inbound processor further, let's change the path "/node/1" to be "/some-random-path". In this example we are looking for the internal path of "/node/1", and if we see this path then we return our new path.
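As a rough sketch under the same assumptions as the outbound service definition (the class name and namespace come from that definition, and the paths are the illustrative ones from this section), the outbound class could be written like this:

```php
<?php

namespace Drupal\mymodule\PathProcessor;

use Drupal\Core\PathProcessor\OutboundPathProcessorInterface;
use Drupal\Core\Render\BubbleableMetadata;
use Symfony\Component\HttpFoundation\Request;

/**
 * Renders links to the internal path "/node/1" as "/some-random-path".
 */
class OutboundPathProcessor implements OutboundPathProcessorInterface {

  /**
   * {@inheritdoc}
   */
  public function processOutbound($path, &$options = [], ?Request $request = NULL, ?BubbleableMetadata $bubbleable_metadata = NULL): string {
    if ($path === '/node/1') {
      // This is the internal path we want to mask, so return the public path.
      return '/some-random-path';
    }

    // Any other path is returned unchanged.
    return $path;
  }

}
```

The $options array is passed by reference, so a processor that needs to can also adjust things like the query string, although this sketch leaves it untouched.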

With this in place, when Drupal prints out a link to "/node/1" it will render the path as "/some-random-path".

On its own this example doesn't do much; we are just rewriting a path for a single page. The real power is when we combine inbound processing and outbound processing together. Let's do just that.

Creating A Single Class For Path Processing

It is possible to combine the inbound and outbound processors into a single class. This is done by adding both tags to a single service in the module's services file.

    services:
      mymodule.path_processor:
        class: Drupal\mymodule\PathProcessor\MyModulePathProcessor
        tags:
          - { name: path_processor_inbound, priority: 20 }
          - { name: path_processor_outbound, priority: 250 }

The class we create from this definition implements both the InboundPathProcessorInterface and the OutboundPathProcessorInterface, and as such it includes both of the processInbound() and processOutbound() methods.

Now all you need to do is add in your path processing.
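A skeleton for the combined class might look like the sketch below. The class name comes from the service definition above. The two constants are hypothetical names introduced only for this illustration, and the paths are the same placeholder paths used in the earlier examples.

```php
<?php

namespace Drupal\mymodule\PathProcessor;

use Drupal\Core\PathProcessor\InboundPathProcessorInterface;
use Drupal\Core\PathProcessor\OutboundPathProcessorInterface;
use Drupal\Core\Render\BubbleableMetadata;
use Symfony\Component\HttpFoundation\Request;

/**
 * Translates one path in both directions.
 */
class MyModulePathProcessor implements InboundPathProcessorInterface, OutboundPathProcessorInterface {

  // Hypothetical constants, used here so both methods share the same pair.
  const PUBLIC_PATH = '/some-random-path';
  const INTERNAL_PATH = '/node/1';

  /**
   * {@inheritdoc}
   */
  public function processInbound($path, Request $request): string {
    // Public path coming in: swap it for the internal path.
    return $path === self::PUBLIC_PATH ? self::INTERNAL_PATH : $path;
  }

  /**
   * {@inheritdoc}
   */
  public function processOutbound($path, &$options = [], ?Request $request = NULL, ?BubbleableMetadata $bubbleable_metadata = NULL): string {
    // Internal path going out: swap it back for the public path.
    return $path === self::INTERNAL_PATH ? self::PUBLIC_PATH : $path;
  }

}
```

Keeping both directions in one class makes it harder for the inbound and outbound mappings to drift apart.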

It's a good idea to create a construct like this so that you translate the path both going into and coming out of Drupal. This creates a consistent path model and prevents duplicate content issues where the same page is available at more than one path.

The Redirect Module

If you are planning to use the inbound path processor system then you should be aware that the Redirect module will attempt to redirect your inbound path processor changes to the rewritten paths. The Redirect module is a great module, and I install it on every Drupal site I run, but in order to prevent this redirect you'll need to do something extra, which we'll go through in this section.

To prevent the Redirect module from redirecting a path you need to add the attribute _disable_route_normalizer to the request before the kernel.request event triggers in the Redirect module's RouteNormalizerRequestSubscriber class. We do this by creating our own event subscriber and giving it a higher priority.

The first thing to do is add our event subscriber to our custom module services.yml file.

      mymodule.prevent_redirect_subscriber:
        class: Drupal\mymodule\EventSubscriber\PreventRedirectSubscriber
        tags:
          - { name: event_subscriber }

The event subscriber itself just needs to listen to the kernel.request event, which is stored in the KernelEvents::REQUEST constant. We need to trigger our custom module before the redirect module event, and so we set the priority of the event to be 40. This is higher than the Redirect module event, which is set at 30.

All the event subscriber needs to do is listen for our path and then set the _disable_route_normalizer attribute on the request if it is detected.

        if ($event->getRequest()->getPathInfo() === '/en/some-random-path') {
          $event->getRequest()->attributes->set('_disable_route_normalizer', true);
        }
      }

    }
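For context, a complete subscriber along these lines might look like the following sketch. The class name comes from the service definition above and the priority of 40 from the description. The method name preventRedirect() is an assumption made for this example, as is the use of Symfony's RequestEvent class, which is the event object passed to kernel.request listeners in recent Drupal versions.

```php
<?php

namespace Drupal\mymodule\EventSubscriber;

use Symfony\Component\EventDispatcher\EventSubscriberInterface;
use Symfony\Component\HttpKernel\Event\RequestEvent;
use Symfony\Component\HttpKernel\KernelEvents;

/**
 * Stops the Redirect module from normalizing our rewritten path.
 */
class PreventRedirectSubscriber implements EventSubscriberInterface {

  /**
   * {@inheritdoc}
   */
  public static function getSubscribedEvents(): array {
    // Priority 40 runs before the Redirect module's subscriber at 30.
    $events[KernelEvents::REQUEST][] = ['preventRedirect', 40];
    return $events;
  }

  /**
   * Flags the request so that the route normalizer skips it.
   *
   * The method name is hypothetical.
   */
  public function preventRedirect(RequestEvent $event): void {
    if ($event->getRequest()->getPathInfo() === '/en/some-random-path') {
      $event->getRequest()->attributes->set('_disable_route_normalizer', TRUE);
    }
  }

}
```

Note that getPathInfo() returns the raw request path, so the comparison includes the language prefix ("/en") where one is in use.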

When the Redirect module event triggers it will see this attribute and ignore the redirect.

This will only happen if you are changing the path of an entity of some kind using only the inbound path processor. Creating only the inbound processor creates an imbalance between the outer path and the translated inner path, which we then need to let the Redirect module know about to prevent the redirect. If we also translated the outbound path in the same (and opposite) way then the redirect wouldn't occur.

Doing Something Useful

We've looked at swapping paths and preventing redirects, but let's do something useful with this system.

I was recently tasked with creating a module that would allow any page to be rendered as RSS. It wasn't that we needed an RSS feed, but that each individual page should have an RSS version available.

This was required as there was an integration with an external system that was used to pull information out of a Drupal site for newsletters. Having RSS versions of pages made it much easier for the system to parse the content of the page and so produce the newsletter. This also meant that if the theme changed the system wouldn't be affected as it wouldn't be using the theme of the site.

Essentially, the requirement meant that we needed to add "/rss" after any page on the site and it would render the page accordingly.

The resulting module was dubbed "Node RSS" and made extensive use of path processors to produce the result.

The first step was to create a controller that would react to paths like "/node/123/rss" and render the page as an RSS feed. This required a simple route to be set up to allow Drupal to listen to that path and also to inject the current node object into the controller. The route also contains a simple permission, which provided a convenient way of activating the system when it was ready.

    node_rss.view:
      path: '/node/{node}/rss'
      defaults:
        _title: 'RSS'
        _controller: '\Drupal\node_rss\Controller\NodeRssController::rssView'
      requirements:
        _permission: 'node.view all rss feeds'
        node: \d+
      options:
        parameters:
          node:
            type: entity:node

The rssView action of the NodeRssController just needs to render the node and return it as part of an RSS document. Using this we can now go to a node page at "/node/123/rss" and see an RSS version of the page.

I won't go into detail about producing the RSS version of the page here as it contains a lot of boilerplate code that goes beyond the scope of this article.

So far we only have half the functionality required. Seeing an RSS version of the page via the node ID is fine, but what we really want is to visit the full path of the page with "/rss" appended to the end.

The next step is to set up our path processor so that we can change the paths on the fly. In addition to the tags we are also passing in two other services for us to use in the class. These services are the path_alias.manager service for translating paths and the language_manager service to ensure that we get the path with the correct language.

    services:
      node_rss.path_processor:
        class: Drupal\node_rss\PathProcessor\NodeRssPathProcessor
        arguments:
          - '@path_alias.manager'
          - '@language_manager'
        tags:
          - { name: path_processor_inbound, priority: 20 }
          - { name: path_processor_outbound, priority: 220 }

The processInbound() method looks for the "/rss" string at the end of the passed path. If this is found then we remove that from the path and try to find the internal path of the page in the site. If we do find the path then it will be returned as "/node/123" instead of the full path alias and this means we can just append "/rss" to the end of the path to point the path at our NodeRssController::rssView action.

    public function processInbound($path, Request $request): string {
      if (preg_match('/\/rss$/', $path) === 0) {
        // String is not an RSS feed string.
        return $path;
      }

      // Strip only the trailing "/rss" so the rest of the path is left alone.
      $nonRssPath = preg_replace('/\/rss$/', '', $path);
      $internalPath = $this->pathAliasManager->getPathByAlias($nonRssPath, $this->languageManager->getCurrentLanguage()->getId());

      if ($internalPath === $nonRssPath || preg_match('/^\/node\//', $internalPath) === 0) {
        // No matching path was found, or, it wasn't a node path that we have.
        return $path;
      }

      return $internalPath . '/rss';
    }

The opposite process needs to happen for the processOutbound() method. In this case we look for a path that looks like "/node/123/rss" and convert this back into the full path alias of the page. If we find an alias for that path then we append "/rss" to the path and return it.

    public function processOutbound($path, &$options = [], Request $request = NULL, BubbleableMetadata $bubbleable_metadata = NULL): string {
      if (preg_match('/^\/node\/.*?\/rss$/', $path) === 0) {
        // String is not an RSS feed string.
        return $path;
      }

      // Strip only the trailing "/rss" so the rest of the path is left alone.
      $nonRssPath = preg_replace('/\/rss$/', '', $path);
      $alias = $this->pathAliasManager->getAliasByPath($nonRssPath, $this->languageManager->getCurrentLanguage()->getId());

      if ($nonRssPath === $alias) {
        // An internal alias was not found.
        return $path;
      }

      return $alias . '/rss';
    }

We now have an RSS feed for any content path on the website (as long as it is a node page of some kind).

If we attempted to visit the RSS output of any other kind of page (like a taxonomy term) then we would receive a 404 error. This is possible thanks to the route we have in place as the parameter will only accept node paths.

As we have translated the path completely we do not need the Redirect module overrides here since there is a coherent input/output mechanism for these paths. It's only when there is an imbalance in the paths that we need to override the Redirect module to prevent redirects.

Don't worry if you are looking for the full source code for the above module as I have recently released the Node RSS module on Drupal.org. It only has a dev release for the time being as I would like to add the ability to pick what content types are available for the feeds. I'm also testing it with different setups to make sure that the feed works in different situations. Let me know if it is useful for you and please create a ticket if you have any issues.

If you want to see another module that makes use of this technique then there is the Dynamic Path Rewrites module. This allows the rewriting of any content path on the fly without creating path aliases. It is an alternative to modules like Pathauto that does not create path aliases within your system, and it uses a nice caching system to speed up the responses.

Conclusion

The path processing system in Drupal is really quite powerful and can be used to build some interesting features that rewrite paths on the fly. We can take any incoming request and point it at any internal path we like.

Without this system in place we would need to generate additional aliases for every path we wanted and add them to the database before we would be able to use the system. That is fine on smaller sites, but I manage sites with millions of nodes and that amount of data would bloat the database and probably not be used all that much.

Path processing does have some interactions with other modules (like Redirect) but these problems are easily overcome. Perhaps the most complex part of this is ensuring that you have the right weights to some of the interactions here as getting things wrong will likely lead to unwanted interactions.

Annual awards for the best Drupal projects

Last Friday, the prestigious #SplashAwards2023 took place in Mannheim. This annual event, often referred to as the Oscars of the Drupal community, gives agencies the opportunity to submit their outstanding #Drupal projects.

This year, the awards featured a total of 28 projects. There was great excitement when the winners in 8 different categories were announced. Each category recognised both a winner and a deserving runner-up. In addition, every year the jury honours special projects close to their hearts with the "Honorable Mentions".

1xINTERNET continues its winning streak

Since their introduction in German-speaking countries in 2017, the Splash Awards have become a benchmark for outstanding Drupal projects.

This year, 1xINTERNET has once again impressed the jury with its innovative solutions and creative projects and continued its success with both a first and a second place.

The Splash Awards are not only a recognition of hard work and outstanding dedication, but also a chance to network with industry peers, talk about the latest trends in web development and design, and celebrate the best solutions.

And the winner is…

The 2023 Splash Awards will be presented on November 10 in Mannheim, Germany. As every year, we look forward to presenting our work and hope to continue our success story this year. We congratulate all nominees and look forward to exchanging ideas with other experts in the Drupal community.

DrupalCon is the biggest event in the European Drupal community calendar, and this year, it will take place in Lille, France from October 17 to 20. We are excited to share that we are Platinum sponsors of the event, arriving with a big team from 1xINTERNET.

We're always excited about DrupalCon, as it's one of the biggest events of the year. This year is extra special for us: we're celebrating our 10-year anniversary in December. That's 10 years of Drupal projects! We are bringing a big part of our team with us, showcasing our CMS with a "TRY DRUPAL" demo, giving you the chance to test out the foundation of our digital projects. Come and meet us at our booth in the exhibition hall!

We contributed the module Search API Decoupled to allow the creation of engaging search experiences with client-side Javascript applications.

Compared to traditional server-side search, client-side solutions are much faster, because fetching and rendering search results is much more efficient than requesting full pages.

We released the backend functionality of Search API Decoupled at Drupal Dev Days 2023 in Vienna; the slides and video are available online.

However, Drupal needs a fully working search client so that developers and marketers can easily evaluate the functionality for their projects.

Such a client should provide great search functionality out-of-the-box and should be easy to extend and style.

We applied for funding at the Drupal Innovation Contest Pitchburgh but we did not get it.

But we decided to build and contribute the functionality anyway.

I get offers almost every day from recruiters looking for Drupal talent. I appreciate sincere offers, but most fail in ways that are obvious to me as a candidate. I used to write a response to every offer, but that took too much time. Now I delete most offers as soon as I receive them. If you are a recruiter, this may baffle you. Why would I ignore your offer if I’m open to work? For recruiters who have been waiting to hear back from me, consider this my response. For recruiters who want to do better, here are some suggestions.

Know your target candidate

An experienced engineer who has been in a position for a long time has no reason to respond to a short term offer at an entry level pay rate. An entry level developer cannot meet requirements for years of experience with multiple versions of Drupal. You have to choose which kind of talent to recruit, or you will not reach anyone.

The most experienced candidates are usually well established in their jobs. If you want to recruit them, you need to understand what it takes for your job to be better than the one they already have. If you want to pay entry level rates, you need to offer candidates a path to obtaining the experience you require.

Blasting out a message with “Urgent” in the title and a long list of requirements shows high expectations, but I can’t imagine how it is supposed to attract either experienced or entry level candidates. Even worse is offering entry level pay but requiring qualifications that only an experienced candidate can meet. I will not be flattered that you think my experience is an exact match for your needs.

Be clear about what you are offering

Most of the offers I see completely fail to say what they are offering. Any decent offer starts with reasonable pay. I no longer respond to offers that do not include a target pay range. Disclosing a pay range does not weaken your negotiating position. You do not automatically have to pay the top of the range just because the candidate knows what it is. You and the candidate negotiate what the candidate will bring to the job to justify the top rate. If what the candidate offers does not justify being paid in the upper part of the pay range, you would pay in the lower part of the range.

If you contract with an agency you will have to pay about US$150 per hour for Drupal work. If you want to contract directly with an experienced developer, expect to pay at least US$80 per hour. If you are recruiting an established developer with a salary of US$150,000 per year with benefits, you need to offer more.

Money is not the only form of compensation that matters. Highlighting other forms of compensation helps your offer to stand out. These could include

- Time off

- Working remotely

- A supportive office culture

- Work-life balance

- Time to work on personal projects

- A clear plan for career advancement

Provide a path for entry level candidates

If the top of your pay range is US$60,000 a year, you need to open the position to entry level candidates. Instead of expecting candidates to show up with qualifications and experience, think about offering a path for them to obtain qualifications and experience. The need may be immediate, but wishing for experienced candidates who will work for entry level pay won’t make them appear. How can you provide a path to experience for entry level candidates?

The best way for a new candidate to obtain experience is to partner with a mentor.

- The Drupal community has an active mentorship program for new contributors. Some mentors may be willing to work with your candidates.

- If your company has experienced developers, assign them to mentor new candidates.

- Work with candidates from a company that provides training, such as DrupalEasy, Debug Academy, or EvolvingWeb.

Other ways you can give new candidates experience:

- Sponsor them to work on projects for non-profit organizations.

- Sponsor their contributions to open source projects related to their work.

Artificial intelligence is at a point where it can help developers boost their skills quickly. Providing access to artificial intelligence will help entry level candidates improve their productivity, but they need to know enough to check their work, find documentation, and collaborate with other developers. When access to code has to be protected, developers may not be able to use artificial intelligence.

Understand the role

Most job offers I see are getting better at this. Drupal work encompasses information architecture, site building, and front and back end coding. To get really good, most people have to focus on one of these. The mythical full stack expert who does a complex, highly customized project all on his own in a short period of time is usually not available. If you cannot be specific about the type of work, you may not be ready to recruit candidates.

The project matters

It’s not just the work that matters, but what the project is and who it is for. Would I be proud to be working on this project? Do I respect the company? This is where you can really make your offer stand out from the rest.

- Is the company a leader in their field?

- Will the project have a positive impact on my community?

- Will I be working with colleagues whose past work is widely respected?

- Is it an opportunity for me to apply my skills in a new field?

The personal touch

If you have done everything I have discussed so far, I am really going to be impressed with your offer. I may even pass it along to my talent network. If that was your goal, you can stop now. But if you want to guarantee that I will not only be impressed but also personally respond to your offer, there is one more thing you can do. Give it the personal touch.

What could make the job personally meaningful to me?

- Are there people I have worked with before who want to work with me again?

- Is the work closer to my area of interest than my current job?

- Does it support a cause I believe in?

- Is there any existing connection to me or my family?

- Does the company know about and want to support work I’ve been doing on my own?

If you add the personal touch to a good offer, you will definitely hear back from me, and I will give serious consideration to your offer.

If you need help implementing any of my suggestions for a position you are trying to fill, please contact me and I will do my best to help.

Jill Farley, Ken Rickard, and Byron Duvall discuss their experiences with the Cypress front-end testing framework.

Podcast Links

Cypress.io

Transcript

George DeMet:

Hello and welcome to Plus Plus, the podcast from Palantir.net where we discuss what’s new and interesting in the world of open source technologies and agile methodologies. I’m your host, George DeMet.

Today, we’d like to bring you a conversation between Jill Farley, Ken Rickard, and Byron Duvall about the Cypress front end testing framework. Cypress is a tool that web developers use to catch potential bugs during the development process. It’s one of the ways we can ensure that we’re building quality products that meet our client’s needs and requirements.

So, even if you aren’t immersed in the world of automated testing, this conversation is well worth a listen. Without further ado, take it away, Jill, Ken, and Byron.

Jill Farley:

Hi, I'm Jill Farley from Palantir.net. I'm a senior web strategist and UX architect. Today, I'll be discussing Cypress testing with two of my colleagues. I'll let them introduce themselves, and then we can have a relaxed conversation about it.

Ken Rickard:

I'm Ken Rickard. I'm senior director of consulting here at Palantir.net.

Byron Duvall:

And I am Byron Duvall. I'm a technical architect and senior engineer at Palantir.net.

Jill Farley:

Well, thanks for sitting down and talking with me today, you guys. We are going to maybe just start this off for anybody who doesn't know what Cypress automated testing is with a quick, maybe less technical overview of it. So, Ken, what are Cypress tests in 60 seconds?

Ken Rickard:

In 60 seconds, Cypress is a testing framework that is used to monitor the behavior of a website or app in real time within the browser, so it will let you set up test scenarios and record them and replay them so that you can guarantee that your application is doing what you expect it to do when a user clicks on the big red button.

Jill Farley:

I did not time you, but that was brief enough. To give a little context for why we're talking about this today, our technical team, led by Ken, just recently developed a virtual event platform for one of our clients. Actually, it was developed a couple of years ago.

We've been iterating on it for a few years and it's unique in that it debuts for a few intense weeks each year. It's only live for a couple of weeks and specifically hosts this virtual event for four days, and then goes offline for the rest of the year. So, we have to get it right and we specifically have to get it right for the tens of thousands of visitors over the course of that four-day event that are coming.

So, this year we really went all in on Cypress testing to really ensure the success of the event.

So, Ken, I've heard you say we have 90% test coverage on this particular platform right now after the work that we've done. What does that mean? What does 90% test coverage mean?

Ken Rickard:

"It means we can sleep at night," I think, is what I mean when I say that. It’s simply that roughly 90% of the things that an individual user might try to do on the website are covered by tests. So, I joke a little bit about what happens when you press the big red button.

I mean, we have big orange buttons on the website, and the question becomes, "What happens when you press that button? Does it do the thing you expect it to do?" Also, the content and behavior of that button might change depending on whether or not we're pre-conference, we're during the conference, or we're during a specific session in the conference, and that changes again post-conference.

So, we have all of these conditions that change the way we expect the application to behave for our audience. I'll give you a simple example. During a session, the link of the session title, when you find it in a list, doesn't take you to the session page. It takes you to the video channel that's showing that session at that time. That is true for most sessions for a 30-minute window during the entire conference.

Our testing coverage is able to simulate that so we know, "Yep, during that 30-minute window, this link is going to go to the right place." So when we talk about that sort of 90% coverage, it means, from an engineering standpoint, well, even from a product management standpoint, you can look at the feature list and say, "Well, we have 300 features on this website and we can point to explicit tests for 270 of them."

Those numbers I just made up, but that gets you the point.

Jill Farley:

That sounds like an incredible amount of work to try to understand what to test and what types of tests to write. I'm actually going to go over to Byron for a second. As a member of the development team, what was it like actually writing these tests, creating them, and using them?

Up front, prior to them actually doing their job and covering our bases, if it was hard, let's talk about that.

Byron Duvall:

It was interesting and it was different because we use a different language for the testing. There's a lot of special keywords and things that you have to use in the testing framework, so just learning that was a bit of a curve.

Then, the biggest issue, I think we ran into with all of the testing was the timing of the test. The Cypress browser runs tests as fast as it can, and it runs faster than a human can click on all of the things. So, you start to see issues when the app doesn't have time to finish loading before the test is clicking on things. You have to really work to make sure that you have all of the right conditions for that to happen, and everything loaded, and you have to specifically wait on things.

I think that was the most challenging part. We usually had an idea of what we were looking for when we were writing a piece of functionality, what we were looking for it to do. That was kind of an easier part because we could write the click commands and write the test for what we're actually looking for in the return on the page.

So, that was the easiest part of it. The trickiest part was just the whole timing issue.

Jill Farley:

So Ken, how do we decide what to test if we're doing hundreds of tests? Is there ever really an end to what we can test or how do you do that prioritization?

Ken Rickard:

There is a theoretical end because we could theoretically cover every single combination of possibilities. You go for what's most important, and for what the showstopper bugs are. So, for instance, here are three simple examples from the first tests we wrote. Test #1: Do the pages that we expect to load actually load? And do they have the titles that we expect them to have when we visit them?

Test #2: Those pages all exist in the navigation menu. Does the navigation menu contain the things we expect it to? And when you click on them, do they go to the pages we want them to? Also fairly simple.

Then we start to layer in the complexity because some of those menu items and pages are only accessible to certain types of users, certain types of conference attendees on the website. So you have to have a special permission or a pass to be able to see it.

So test #3 would say, "Well, we know that this page is only visible to people who are attending a conference in person. What happens when I try to hit that page when I'm not an in-person attendee? And does it behave the way we expect it to?"

So we start from the sort of show stoppers, right? Because if someone has paid extra money for an in-person ticket, but I let everyone view that page or don't treat that in-person user as special in some way, we're going to have angry clients and angry attendees. So we sort of test that piece first.
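The access rule behind test #3 can be sketched as a small pure function. This is an illustration only: the pass types, the page path, and the choice of a 403 response are assumptions, since the conversation does not say how the real site responds to a visitor without the right pass.

```typescript
// Hypothetical pass types; the real site's categories are not given in the source.
type PassType = "in-person" | "virtual" | "none";

interface Page {
  path: string;
  title: string;
  allowedPasses: PassType[];
}

// Decide what a visitor with a given pass sees when requesting a page.
// Returning 403 for a disallowed pass is an assumption made for this sketch.
function visit(page: Page, pass: PassType): { status: number; title: string } {
  if (page.allowedPasses.indexOf(pass) !== -1) {
    return { status: 200, title: page.title };
  }
  return { status: 403, title: "Access denied" };
}

// Hypothetical page that only in-person attendees may view.
const inPersonOnlyPage: Page = {
  path: "/in-person-events",
  title: "In-Person Events",
  allowedPasses: ["in-person"],
};
```

A test for this rule checks both directions: the in-person attendee gets the page, and everyone else does not.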

Then it's a question of, I would argue, testing the most complicated behaviors first. Like, what is the hardest thing? What is the thing most likely to go wrong that will embarrass us? And in that case, it's that we have a whole bunch of functionality around adding things to and removing things from a personal schedule.

And we counted it up, and because we have pre-conference, during-conference, and post-conference, there turned out to be 15 different states for every single session, and we have tests that cover all 15 of those states. So we know what happens when you, like I say, click the big something at a specific time.

So that's really how we break it down.
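One way to keep a state matrix like this manageable is to generate the cases from the dimensions rather than listing them by hand. The sketch below assumes the 15 states come from three conference phases multiplied by five per-session statuses. Only the three phases are named in the conversation; the five statuses are invented placeholders.

```typescript
// The three phases are mentioned in the conversation.
const phases = ["pre-conference", "during-conference", "post-conference"] as const;

// ASSUMPTION: these five statuses are hypothetical placeholders for illustration.
const statuses = ["not-added", "added", "waitlisted", "in-progress", "finished"] as const;

interface SessionState {
  phase: string;
  status: string;
  label: string;
}

// Build every phase/status combination so each one can get its own test case.
function allSessionStates(): SessionState[] {
  const states: SessionState[] = [];
  for (const phase of phases) {
    for (const status of statuses) {
      states.push({ phase, status, label: `${phase} / ${status}` });
    }
  }
  return states;
}
```

Each generated label can then name one test, which makes it easy to see when a combination has no coverage.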

Jill Farley:

Makes tons of sense. It brings new meaning to coverage. We're not just covering the functionality; we're kind of covering our butts too, making sure that, you know, we're not missing any of the big things that could really affect the attendee experience and, I guess, the business focus of the conference.

Ken Rickard:

Right. And there are two other things as well. It does let us focus on what the actual requirements are because there are times when you go to write a test, you're like, "Well, wait a minute. I'm not sure what this thing should do if I click on it. Let's go back to the project team and find out." And we did that a number of times.

And then when you have something that's complex and time-sensitive, the biggest risk you run from a development standpoint, I think, is "Oh, we fixed issue A but caused issue B." So, you get a bug report. You fix that bug and it breaks something else.

Complete test coverage helps you avoid that problem. We broke tests a lot, and you'd see a failing test and be like, "Oh wait, that thing I just touched actually has effects on other parts of the system." And so having those pieces, again, gives us a better product overall.

Jill Farley:

So test failures could be a good thing in some cases.

Ken Rickard:

Very much so. I actually was reviewing someone's work this morning and they had to change the test cases. I don't think they should have, based on the work they were doing. So, I was reviewing the pull request and I said, "Hey, why did you change this? This doesn't seem right to me because it indicates a behavior change that I don't think should exist."

Jill Farley:

Byron, I want to ask you. I know that you were involved in some of the performance work on this particular platform. What do you think? Did our Cypress tests in any way prevent some performance disasters? Or do you think that it's mostly about functionality? Like, do the two relate in any way?

Byron Duvall:

I don't think we had any tests that uncovered performance issues. I can't think of any specific example. It was mostly about the functionality and it was about avoiding regressions, like Ken was talking about. You change one thing to fix something, and then you break something else over here. I don't think that we had any instances where a performance bug would have been caught.

Ken Rickard:

I would say every once in a while. One of the things that Cypress does is it monitors everything your app is doing, including API requests. I think it came in handy in a couple of cases where we were making duplicate requests. So, we had to refactor a little bit. These were pretty small performance enhancements, so yeah, nothing big around infrastructure scaling or things like that.

But Cypress could catch a few things, particularly, I mean, if you're talking about a test loading slowly. It's like, “Oh, we have to wait for this page to load.” That can be indicative of a performance issue.

Byron Duvall:

Yeah, that's a good point. And then, the Cypress browser itself will show you every request that it's making, so you can tell if it's making lots of requests that you don't believe it should be making, or if it's making them at the wrong times. That could indeed be a way to uncover something, but apart from those clues that you might get from Cypress testing, it's really completely separate from the tool that we use to test performance.
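The duplicate-request clue mentioned above can be illustrated with a small helper that scans a list of recorded requests. This is a sketch in plain TypeScript, not Cypress code; `RequestRecord`, `findDuplicateRequests`, and the example URLs are hypothetical.

```typescript
// Hypothetical shape of one recorded network request.
interface RequestRecord {
  method: string;
  url: string;
}

// Return every "METHOD url" pair that appears more than once in the log.
function findDuplicateRequests(log: RequestRecord[]): string[] {
  const counts = new Map<string, number>();
  for (const request of log) {
    const key = `${request.method} ${request.url}`;
    counts.set(key, (counts.get(key) || 0) + 1);
  }
  const duplicates: string[] = [];
  counts.forEach((count, key) => {
    if (count > 1) {
      duplicates.push(key);
    }
  });
  return duplicates;
}
```

A repeated key is only a hint: some requests are legitimately repeated, so a result like this is a prompt to look closer rather than proof of a bug.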

Jill Farley:

So we've talked about Cypress testing and functionality. Can it test how things look? How things display?

Ken Rickard:

It can. But it's not a visual testing tool. It's not going to compare screenshot A to screenshot B, but we could write specific tests for markup structure in the HTML. For example, does this class exist inside this other class? It does have some tools for testing CSS properties, which we use in a few cases. Jill, you'll remember this. Are we using the right color yellow in one instance? Thus, we have an explicit test for, “Hey, is this text that color?”

Jill Farley:

That would have been the big disaster of the event: if it wasn't the right color yellow.

Ken Rickard:

So, we do have a few of those which are visual tests, but they are not visual difference tests. That's a whole different matter. However, you can write a test to validate, going back to my previous example, that the big orange button is, in fact, orange.
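One practical detail when testing a CSS color: browsers typically report computed colors in `rgb(r, g, b)` form, while design specs are often written in hex. A small conversion helper, sketched here in plain TypeScript, lets a test state the expected color in hex. The function name and the example values are assumptions, not the project's actual brand colors.

```typescript
// Convert a 6-digit hex color such as "#FFA500" into the "rgb(r, g, b)" string
// form that computed styles typically use for opaque colors.
function hexToRgb(hex: string): string {
  const match = /^#?([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex.trim());
  if (!match) {
    throw new Error(`Unsupported color: ${hex}`);
  }
  // match.slice(1, 4) holds the red, green, and blue hex pairs.
  const channels = match.slice(1, 4).map((part) => parseInt(part, 16));
  return `rgb(${channels.join(", ")})`;
}
```

The resulting string can then be compared against whatever the test framework reads back as the element's computed color.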

Jill Farley:

Just a couple more questions. Let's start with: What are a few ways that going all in on Cypress testing this year got in our way? Perhaps there's something we might do a little differently next time to streamline this, or maybe it's always going to get in our way, but it's worth it.

Ken Rickard:

I think the answer is, it can be painful, but it's worth it. The fundamental issue we had when we pushed things up to GitHub and then into CircleCI for continuous integration testing is that we don't have as much control over the performance and timing of the app running on CircleCI as we do when we're running it locally. So tests that pass routinely locally might fail on CircleCI. That took us a long time to figure out. There are ways to get around that problem, which we are using.

The other significant issue is that to do tests properly, you have to have what are called data fixtures. These are simply snapshots of what the website content looks like at certain points in time. They're called fixtures because they are fixed at a point in time, so they should not change. But because we were transitioning from last year's version of this application to this year's, there was a point where we had to change the content in our fixtures. We're actually about to experience that next week when we transition from the test fixtures we were using to a new set of fixtures, which contain the actual data from the conference: all of the sessions, all of the speakers, all of it.

Updating that is a massive amount of work. So being able to rely on things like, "Hey, I want to test that Jill Farley's speaker name comes across as Jill Farley," means we have to make sure that the content we have maps to that.
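The cost of swapping fixtures can be made concrete with a small check that reports which test expectations no longer match the fixture data. This is a sketch under assumptions: the `Speaker` and `Expectation` shapes, the ids, and `findStaleExpectations` are hypothetical, and only the speaker name comes from the example above.

```typescript
// Hypothetical fixture record and test expectation shapes.
interface Speaker {
  id: number;
  name: string;
}

interface Expectation {
  speakerId: number;
  expectedName: string;
}

// Return the expectations that the given fixture can no longer satisfy,
// either because the speaker is missing or because the name differs.
function findStaleExpectations(
  fixture: Speaker[],
  expectations: Expectation[]
): Expectation[] {
  return expectations.filter((expectation) => {
    const speaker = fixture.find((s) => s.id === expectation.speakerId);
    return speaker === undefined || speaker.name !== expectation.expectedName;
  });
}
```

Running a check like this against a new fixture set gives a list of tests to update before the switch, instead of discovering them one failing run at a time.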

Jill Farley:

Is it fair to say that incorporating this into our development process is, again, worth it, but that it slows the process down?

Ken Rickard:

I don't think it overall slowed us down. I believe overall, it might have increased our efficiency.

Byron Duvall:

Indeed, I believe it did increase our efficiency. It allows us to avoid a lot of manual point-and-click testing on things when we're done. We can do some development, write a test or have someone else write the test, and use that tool to do our manual testing while we're coding instead of just sitting there pointing and clicking. Even creating test data, if we have the fixtures in there like Ken was talking about, is a big help as well.

One of the things that did slow us down, however, was having to differentiate between a timing or an expected failure versus an actual problem or regression with the code. There were instances where we didn't recognize which type of failure it was. We changed the test, but we had actually broken something else in the app, so we should have paid more attention to that specific test failure.

When you're trying to troubleshoot various types of failures, timing failures, and things that may differ on CircleCI or just fail intermittently, figuring out whether it's a real problem or not can slow you down. It can also kind of defeat the purpose of testing as well.
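One hedged heuristic for telling these failure types apart is to rerun the same test several times and look at the pattern of results. The sketch below is an assumption added for illustration, not something the team describes doing: a test that fails on every run is more likely a real regression, while a mix of passes and failures points toward timing.

```typescript
type Verdict = "passing" | "consistent-failure" | "intermittent";

// Classify a test from the pass/fail results of repeated runs.
// "intermittent" suggests a timing problem; "consistent-failure" suggests
// a real regression. This is a heuristic, not a guarantee.
function classifyReruns(results: boolean[]): Verdict {
  if (results.length === 0) {
    throw new Error("Need at least one run to classify");
  }
  const passes = results.filter((passed) => passed).length;
  if (passes === results.length) {
    return "passing";
  }
  if (passes === 0) {
    return "consistent-failure";
  }
  return "intermittent";
}
```

Even with a classification like this, an intermittent failure still deserves a look, since a genuine race condition in the app can produce the same pattern.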

Ken Rickard:

Right. In normal operation, which I would say we're in about 90% of the time, it simply means that on a given piece of work we're doing, we're changing just one thing, and that's the only thing we need to focus on. We can trust the tests to cover everything else. So, we can be assured that nothing else broke. The question then becomes, do we have a good new test for this thing that just got added?

Sometimes, I conduct pull request reviews without actually checking out the code and running it locally. I can just look at it and think, "OK, I see what you did here. You're testing here. You didn't break anything. OK." That's acceptable and, actually, it's a great feeling.

Jill Farley:

This is probably the last question. We've talked a lot about the benefits to the development process and kind of gotten into the specifics of how to do it. I'm curious, for anyone who's considering incorporating this into their process, are there maybe two to three key benefits from a business perspective or a client perspective? Why take the time to do this?

I can actually think of one from a business side. I was sort of the delivery manager on this project, and in layman's terms, the manual QA process on the client side, once we demoed this work to them, was so much shorter. I mean, last year, when we weren't doing this, there was a lot more pressure on the human point-and-click testing, like Byron was saying, not just on our development team side but on the client side as well. So as they're reviewing the functionality and really testing our work to see if it's ready for prime time, the development of a safer testing process really decreased the number of issues that we found during the final QA phases. It was really nice to sit back at that point and say, "Yeah, we've covered all of our bases."

So Ken, what would you say are the biggest business benefits to incorporating Cypress testing into a product?

Ken Rickard:

The biggest business benefits, I would say, are getting a better definition of what a feature actually does because the developers have to implement a test that covers that feature. This creates a good feedback loop with the product team regarding definition. Another significant factor that derails projects is either new feature requests that come in at inappropriate times or regressions caused by making one change that accidentally breaks multiple things we were unaware of. You witnessed such incidents in past projects when we didn't have test coverage, but now we don't have to deal with that anymore. Those are the big ones.

It also occurred to me, as you were talking about the client, that one of the nice things about Cypress is that it records all the tests it runs and generates video files. Although we didn't share those with the client, we could have sent them the videos and said, "Okay, we just finished this feature. Here's how it plays. Can you make sure this covers all your scenarios?" They could watch the video and provide feedback. This potential is significant from a business standpoint because it allows for asynchronous review. It's funny because when you play back the Cypress videos, you actually have to set them to play at around 1/4 speed; otherwise, it's hard to follow along.

Jill Farley:

So, on that question I had about whether this slows us down: it sounds like we make up the time.

Ken Rickard: